LLM Generated Feedback

After a session completes, an LLM reviews the conversation and writes structured feedback on it. You get actionable suggestions without manual triage.

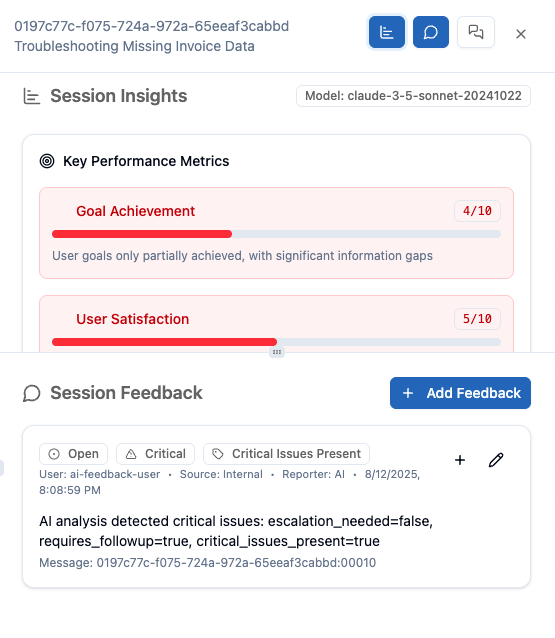

What gets generated

- Summary of the session goal and outcome

- Strengths and issues observed in answers

- Suggestions for prompts, tools, or content
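
To make the three parts above concrete, here is a minimal sketch of how one feedback record might be modeled and aggregated. The `SessionFeedback` interface, its field names, and the `countSuggestionsByTarget` helper are illustrative assumptions, not the actual Pika schema.

```typescript
// Hypothetical shape of one generated feedback record.
// Field names are assumptions for illustration, not the real Pika schema.
type SuggestionTarget = "prompt" | "tool" | "content";

interface SessionFeedback {
  sessionId: string;
  summary: string;      // session goal and outcome
  strengths: string[];  // what the answers did well
  issues: string[];     // problems observed in the answers
  suggestions: { target: SuggestionTarget; text: string }[];
}

// Count suggestions per target so recurring gaps stand out.
function countSuggestionsByTarget(
  items: SessionFeedback[]
): Record<SuggestionTarget, number> {
  const counts: Record<SuggestionTarget, number> = { prompt: 0, tool: 0, content: 0 };
  for (const feedback of items) {
    for (const suggestion of feedback.suggestions) {
      counts[suggestion.target] += 1;
    }
  }
  return counts;
}

// Made-up example data.
const exampleFeedback: SessionFeedback = {
  sessionId: "session-123",
  summary: "User asked how to reset a password; the answer was correct but slow to arrive.",
  strengths: ["Accurate final answer"],
  issues: ["Asked for the account ID twice"],
  suggestions: [
    { target: "prompt", text: "Tell the agent to reuse IDs already given." },
    { target: "content", text: "Add a password reset article." },
  ],
};
```

Grouping suggestions by target is one simple way to see whether prompts, tools, or content need attention first.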

Where it goes

- Stored alongside the session in DynamoDB

- Indexed in OpenSearch for exploration in the Admin Site

Benefits

- Close the loop on quality with minimal effort

- Spot recurring gaps and prioritize fixes

Non-blocking

Feedback is generated asynchronously after the session completes, so it never slows down end-user chats.
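
The non-blocking behavior can be pictured with a small fire-and-forget sketch. This is an in-process illustration only: `generateFeedback` is a hypothetical stand-in for the LLM review, and the real system may well use a queue or a separate worker instead.

```typescript
// Hypothetical stand-in for the LLM review; simulates slow work with a timer.
async function generateFeedback(sessionId: string): Promise<string> {
  await new Promise<void>((resolve) => setTimeout(resolve, 50));
  return `feedback for ${sessionId}`;
}

// Collected results, standing in for persistent storage in this sketch.
const completedFeedback: string[] = [];

// Called when a session completes. The caller does not await the result,
// so the chat path is never held up by feedback generation.
function onSessionComplete(sessionId: string): Promise<void> {
  return generateFeedback(sessionId)
    .then((feedback) => {
      completedFeedback.push(feedback);
    })
    .catch((err: unknown) => {
      // A failed review is logged and dropped; it must not affect the chat.
      console.error("feedback generation failed", err);
    });
}

// Fire and forget: control returns here before the review has finished.
void onSessionComplete("session-123");
```

Swallowing errors inside the background task is a deliberate choice in this sketch: a failed review should cost you one feedback record, not a broken chat.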

Configuration Required

The LLM Generated Feedback (Verify Response) feature must be enabled in your site-wide pika-config.ts file before chat apps can use it. Until it is enabled there, feedback generation is unavailable regardless of individual chat app settings.
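
The precedence rule above can be sketched as a simple gate. The interfaces and the `verifyResponseEnabled` flag name are hypothetical, and the assumption that a chat app must also opt in is not stated in this page; check the actual pika-config.ts types for the real keys.

```typescript
// Hypothetical config shapes; the real pika-config.ts keys may differ.
interface SiteFeatures {
  verifyResponseEnabled: boolean;
}

interface ChatAppSettings {
  // Assumption: each chat app opts in individually.
  verifyResponseEnabled?: boolean;
}

// The site-wide switch is a hard gate: if it is off, app settings are ignored.
function isFeedbackAvailable(site: SiteFeatures, app: ChatAppSettings): boolean {
  if (!site.verifyResponseEnabled) {
    return false;
  }
  return app.verifyResponseEnabled === true;
}

// The app setting alone is not enough when the site-wide flag is off.
const blockedBySite = isFeedbackAvailable(
  { verifyResponseEnabled: false },
  { verifyResponseEnabled: true }
);

// Both levels enabled: feedback generation is available.
const available = isFeedbackAvailable(
  { verifyResponseEnabled: true },
  { verifyResponseEnabled: true }
);
```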
